The Agentic Coder Survey: AI Adoption in Software Engineering

Software engineering is changing at a pace never seen before. LLMs are becoming more capable by the month, new agentic coding tools are emerging on a daily basis, and social media is running hot with developers sharing their insights on being more productive with AI (see Agent Harnesses, Ralph, Gas Town, …).

Some developers adjust their workflow every few days to leverage the new tools. Others are overwhelmed by the rapid change and feel left behind.

I wanted to understand what AI adoption looks like in the real world, so I created a 3-minute survey about the agentic coder landscape, asking about AI adoption, workflows, and sentiments about the rapid changes in the industry.

Take Survey

The Agentic Coder Spectrum: From Conversationalist to System Designer

I’m seeing a massive divergence in AI adoption among developers. While social media makes it seem like everyone is orchestrating multi-agent workflows with plans, harnesses, and feedback loops, most developers I talk to personally are far more pragmatic—using AI selectively where it helps them.

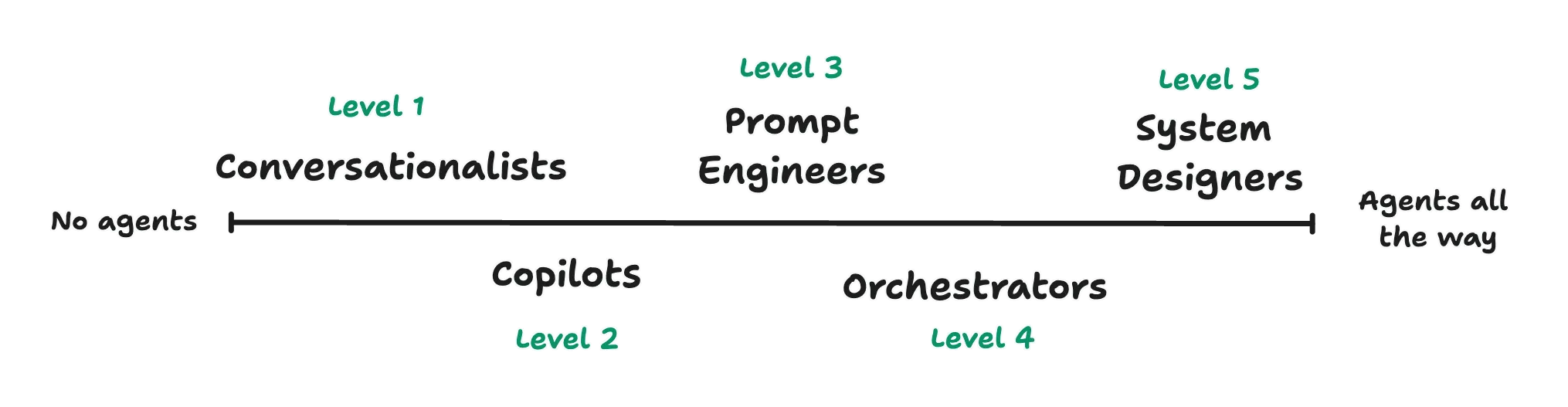

This made me think about how to categorize the adoption of agentic coding tools, and I came up with a spectrum for agentic coders:

Note: This spectrum isn’t about judging what’s better or worse. It’s simply an observation of different adoption levels I saw in personal conversations with people from various developer communities, on social media and in the wider industry.

A note about the Gas Town spectrum

While gathering feedback for this article, @swyx pointed out that this spectrum looks similar to the one shared in Steve Yegge’s recent article Welcome to Gas Town:

- Stage 1: Zero or Near-Zero AI: maybe code completions, sometimes ask Chat questions

- Stage 2: Coding agent in IDE, permissions turned on. A narrow coding agent in a sidebar asks your permission to run tools.

- Stage 3: Agent in IDE, YOLO mode: Trust goes up. You turn off permissions, agent gets wider.

- Stage 4: In IDE, wide agent: Your agent gradually grows to fill the screen. Code is just for diffs.

- Stage 5: CLI, single agent. YOLO. Diffs scroll by. You may or may not look at them.

- Stage 6: CLI, multi-agent, YOLO. You regularly use 3 to 5 parallel instances. You are very fast.

- Stage 7: 10+ agents, hand-managed. You are starting to push the limits of hand-management.

- Stage 8: Building your own orchestrator. You are on the frontier, automating your workflow.

They’re similar in that they both describe AI/agent adoption levels, so I wanted to call out this prior work here. I still decided to keep my own spectrum for the purpose of this survey, since I wanted a less granular spectrum with expressive names for each level.

The following is a characterization of each level on the spectrum. Note that the borders between levels may be fluid, but these levels should help you gain a better understanding of where each developer falls in their AI adoption today.

Level 1: Conversationalists

Conversationalists use AI as a knowledgeable colleague they can consult. They ask questions, get explanations, and occasionally copy/paste code snippets, but their core workflow remains unchanged—they’re still writing code by hand without the help of agents.

Typical workflow

Ask questions, get guidance, write code manually or copy/paste AI-generated code.

Level 2: Copilots

Copilots have integrated AI directly into their development environment with tools like Cursor, GitHub Copilot, or Windsurf. They still write most of their code themselves (or copy/paste it), but lean on AI for autocomplete, boilerplate, and quick implementations while maintaining full control over the architecture and technical decisions.

Typical workflow

Use tab completion, copy/paste AI suggestions, single-agent workloads with manual oversight.

Level 3: Prompt Engineers

Prompt Engineers have shifted their primary workflow from writing code to describing what they want built. They spend most of their time crafting prompts, reviewing generated code, and making targeted fixes, occasionally dropping down to write code manually when AI struggles with specific problems.

Typical workflow

Plan new feature with agent, have agent execute, review code manually or with tests.

Level 4: Orchestrators

Orchestrators run multiple AI agents in parallel, treating different tools as specialized team members that each handle specific aspects of a project. They’ve become managers for their AI team, spending their time coordinating different tools and agents, reviewing their combined outputs, and integrating the pieces while rarely writing code themselves.

Typical workflow

Design feedback loops and tests so that agents can work on problems independently, occasionally review implementations.

Level 5: Systems Designers

Systems Designers have moved from crafting code to designing systems that write code. They’ve recognized that the outcome is defined by the process, not the model—a good enough model with the right process beats a better model with no process every time.

Typical workflow

Design higher-order systems and processes, define specifications and success criteria, build autonomous pipelines with verification gates.

Survey: Mapping the agentic coder landscape

One thing seems certain: the spectrum is widening rapidly, which leads to a massive spread in developer output.

This divergence of output got me curious: where are we in terms of agentic coding and what does the adoption really look like?

So I created a survey to map out the spectrum of agentic coders and to understand real adoption and how developers perceive the changes. You can take it via the link below.

Take Survey

The survey contains questions about:

- actual adoption of AI and agents in dev workflows

- what tools/models devs use

- how much devs pay for AI tools/subscriptions

- how devs feel about the shifts happening (excited, anxious, overwhelmed, …)

Please also share it within your company, friend circles, developer communities, and on social media.

About the survey

The main goal of the survey is to understand where developers are in their AI adoption and how they feel about the AI-driven changes to software development.

How is the survey structured?

It is structured in three sections:

- AI adoption in development workflows (tool / model adoption, expenses, workflow, …)

- Developer sentiment about AI tools (self-assessment, emotions, pressure, …)

- Personal context (experience, compensation, social media usage, …)

Why this survey?

I spent the last nine years as a Developer Advocate watching the developer tooling landscape evolve, and I’ve never seen a shift as rapid (or as polarizing) as the one AI has driven over the past year. I’m also noticing an extreme divergence in AI usage between influencers and experts on the one hand, and “normal” developers on the other.

I wanted to see data beyond the hype on social media and personal anecdotes. How does AI affect most developers in their day-to-day work? Do they feel excited, nervous, neutral or anxious about it? And where are things headed?

Note that this is my own independent research (driven by genuine curiosity), not funded by any vendor or affiliated with any company or product.

Where to find the results?

I will be publishing the results on my blog in March 2026.

Credits

Thanks to @schickling, @mattpocockuk, and @swyx for their feedback on the survey and for helping me improve it.